Researchers have been deciphering brain activity for years. With the help of AI, they have used brain scans to determine which words people are listening to, or to help reproduce the images that participants have gazed at.

When it comes to music, however, the neural language that underpins it has remained mysterious, even though music is central to human experience.

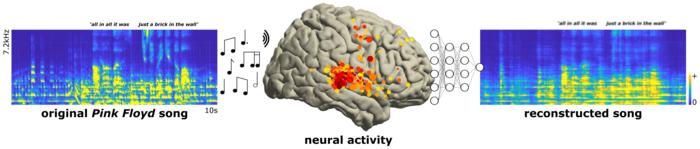

Now a major advance in understanding the ‘neural substrate’ of music has come from a study published in the journal PLOS Biology, in which AI was used to recreate a piece of music from the brain activity of participants as they listened to Another Brick in the Wall, Part 1, from Pink Floyd’s 1979 album The Wall. The haunting and eerie results are, says the team, “the first recognizable song reconstructed from direct brain recordings.”

The work dates back a decade, to when neuroscientists at Albany Medical Center in New York diligently recorded activity from electrodes placed on the brains of 29 patients as the chords of the track filled the surgical suite. The electrodes were there to monitor the patients’ epilepsy as they underwent surgery for the condition. Unlike electrical signals picked up from the scalp using a method called EEG, signals from electrodes placed directly on the brain are much richer and more detailed.

Of the 2,668 electrodes that recorded brain activity, the researchers found 347 whose activity was related to the music. These were mostly located in three regions: the superior temporal gyrus, the sensory-motor cortex and the inferior frontal gyrus.

The team at the University of California, Berkeley, which included Robert Knight, a neurologist and UC Berkeley professor of psychology, and postdoctoral fellow Ludovic Bellier, then trained an AI model on the electrode data recorded while the participants listened to about 90 percent of the Pink Floyd song.

The remaining 10 percent, a 15-second clip from the middle of the track, was left out of the training data, and the AI was then used to recreate this chunk of music from the brain activity alone, based on the patterns it had learned. You can listen to the original song, the music inferred by models fed with 61 electrodes from a single patient, and the music inferred by models fed with all 347 significant electrodes from all 29 patients.

Though the reconstruction is muffled, as if playing underwater, the phrase ‘it’s just another brick in the wall’ was recognisable, with both melody and rhythm reasonably intact. Whether you could identify the song from the reconstruction alone, without having just heard the excerpt, is debatable.

“This study is exciting,” comments Jessica Grahn, a cognitive neuroscientist who studies music at Western University, in London, Ontario. “It’s a real technical achievement to acquire these data, and to be able to reproduce such a complex auditory signal.”

“Song information is incredibly rich: there’s a reason you can’t just sing a tune into Google and have it identify the song for you, despite years of research into analysing auditory signals,” she adds.

Previous research has linked different parts of the brain to perceiving elements of music, including pitch, rhythm, and the texture of sound, called timbre.

The new study found that both hemispheres play a role but, in line with earlier work, the right hemisphere is more engaged by music than the left.

The study also revealed a brain subregion that underlies rhythm perception. The superior temporal gyrus, located in the temporal lobe (which is on both sides of the brain, extending from the temple to behind the ears), seemed to be heavily involved in musical perception, with a particular subregion connected to rhythm, such as Pink Floyd’s thrumming guitar.

Analysis of song elements revealed one region in the gyrus that represents a beat, in this case the guitar rhythm in the Pink Floyd track. “They didn’t have electrodes in many of the other areas we traditionally see responding to rhythm, but it does represent a new discovery to find music engages this part of the superior temporal gyrus,” adds Grahn.

These findings could have implications for developing prosthetic devices that help improve the perception of prosody, the rhythm and melody of speech. Past research has shown that computer modelling can decode and reconstruct speech, but a predictive model for music that includes elements such as pitch, melody, harmony, and rhythm, as well as different regions of the brain’s sound-processing network, was lacking.

Such recordings from electrodes on the brain surface could help reproduce the musicality of speech that’s missing from today’s robotic reconstructions. Knight comments: “As this whole field of brain-machine interfaces progresses, this gives you a way to add musicality to future brain implants for people who need it, someone who’s got ALS or some other disabling neurological or developmental disorder compromising speech.”

Grahn added: “From my perspective, as a rhythm researcher, it will be exciting to determine whether this area is communicating with motor brain regions, which control movement, helping us define the brain network that causes us to move to music.

“My own lab specializes in the links between music and movement, and how music can help people with movement disorders, such as Parkinson’s disease, so I am personally excited about how the techniques in this study will help us understand how different brain areas work together to produce our responses to music, from dancing to emotions to memories.”

The findings also relate to the new exhibition at the Science Museum that opens on Thursday 19 October. “Visitors to Turn It Up: The power of music will hear from scientists studying whether music can really make our heart beat faster, and how it can transport us back in time,” said Emily Scott-Dearing, Guest Curator. “They’ll see the brain sensors and skin monitors used by scientists to investigate music’s extraordinary effects on our bodies and minds. They can even test their dance moves on motion-capture sensors used to study how we move to music and synchronise to its beat.”

Fellow curator Steven Leech of the Science and Industry Museum, Manchester, adds: “Our exhibition is packed with music and musicians, including Haile the robot drummer, which was designed by researchers at Georgia Tech to collaborate with human musicians, and the next-generation robot musician Shimon, which was trained on over 50,000 songs to compose music and write lyrics using a type of artificial intelligence known as deep learning.”

“We challenge our visitors to see if they can tell AI- from human-composed music, and share cutting-edge examples of how AI is being used to deliver daily doses of music to improve the lives of people dealing with dementia.”

He adds: “We are excited to bring Turn It Up from Manchester to London and bring to life the mystery of music and the incredible ways that it impacts all aspects of our lives. Although we know that some people may lack confidence when making music, through this exhibition we hope visitors will discover that we really are all musical.”

If you would like to find out more about how music shapes our lives, visit Turn It Up: The power of music at the Science Museum from 19 October 2023.