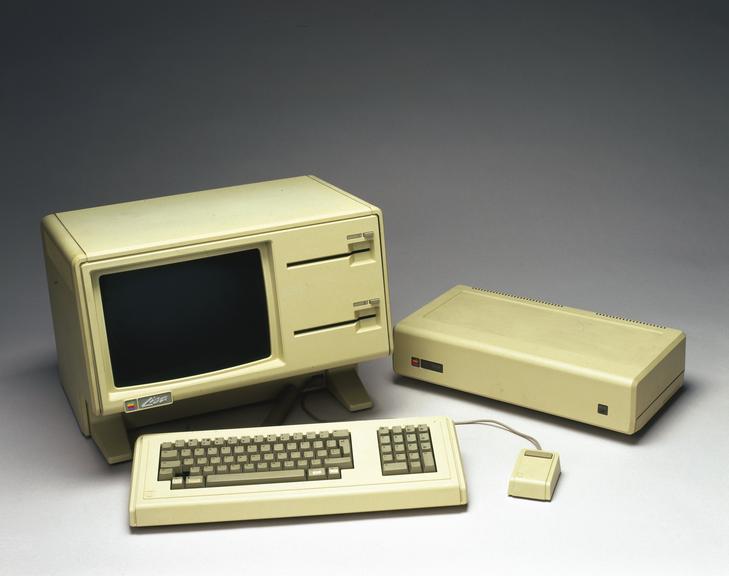

The limitations of computers are usually thought of in terms of hardware, memory and calculations per second. However, a more fundamental snag arises because the numbers they rely on are a poor representation of reality, according to a paper published this morning.

The new study is by Professor Peter Coveney of UCL, together with Professor Bruce Boghosian and Dr Hongyan Wang of Tufts University, Boston, United States (Dr Wang now works at Facebook in Seattle). Prof Coveney is speaking at an event on the future of quantum computing at the Science Museum tomorrow night, to mark the opening of our Top Secret exhibition.

‘Our work shows that the behaviour of the real world is richer than any digital computer can capture,’ says Prof Coveney. ‘Computers can’t simulate everything’.

Their paper, published in the journal Advanced Theory and Simulations, shows that computers cannot reliably reproduce the behaviour of ‘chaotic systems’, which are commonplace, from collisions of molecules to the turbulence in weather, climate, blood flow, fusion reactors and more. ‘Chaos is much more widespread than many people may realise,’ comments Prof Coveney.

Their study builds on a well-known insight from Edward Lorenz of MIT who, in 1961, wished to repeat one of his weather simulations using a simple computer model but got quite different results because of a tiny rounding error in the numbers he fed into the computer.

Lorenz had glimpsed what today is known as the ‘butterfly effect’, where a tiny change in the initial data has huge knock-on effects, a signature of chaos. With this in mind, Boghosian, Coveney and Wang investigated the implications of the limited set of numbers that digital computers can represent.
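To see the butterfly effect in miniature, here is a minimal sketch using the doubling map, x → 2x mod 1 (the simplest case of the generalised Bernoulli map that the researchers studied). It uses Python's exact `fractions.Fraction` arithmetic so that no rounding at all is involved; the two starting values are hypothetical, chosen only because they differ by a tiny amount.

```python
from fractions import Fraction

def doubling_orbit(x0: Fraction, steps: int) -> list[Fraction]:
    """Iterate x -> 2x mod 1 in exact rational arithmetic (no rounding)."""
    orbit = [x0]
    x = x0
    for _ in range(steps):
        x = (2 * x) % 1  # Fraction supports % exactly
        orbit.append(x)
    return orbit

# Two hypothetical starting values differing only from the fourth decimal place on.
a = doubling_orbit(Fraction("0.506127"), 30)
b = doubling_orbit(Fraction("0.506"), 30)

for n in (0, 5, 10, 15, 20):
    gap = abs(a[n] - b[n])
    print(f"step {n:2d}: |difference| = {float(gap):.6f}")
```

Because the map doubles any separation at each step (until it wraps around 1), an initial gap of about one ten-thousandth grows to a sizeable fraction of the whole interval within roughly a dozen iterations.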

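First, digital computers use only a subset of the rational numbers (those that can be written as a ratio of two integers), whereas there are infinitely more so-called irrational numbers, such as π and the square root of 2, which cannot be written that way.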

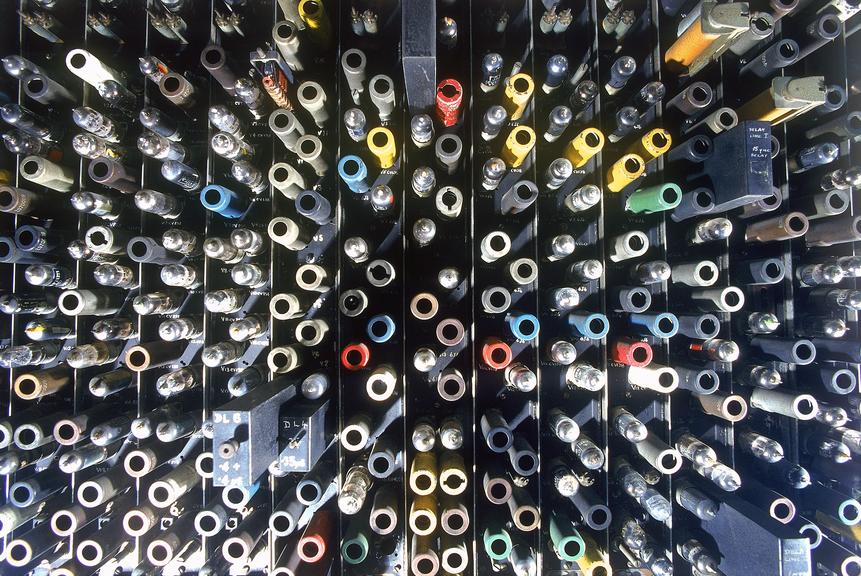

Secondly, digital computers commonly work with about four billion rational numbers spanning the range from minus to plus infinity: the so-called ‘single-precision IEEE floating-point numbers’, named after the technical standard for floating-point arithmetic (IEEE 754) established by the Institute of Electrical and Electronics Engineers in 1985.

Moreover, these four billion numbers are not spread evenly: their spacing grows with their size, so that every interval between successive powers of 2 holds the same number of them. There are as many between 0.125 and 0.25 as there are between 0.25 and 0.5, and as many again between 0.5 and 1. ‘It’s incredible that these floating-point numbers are capable of capturing as much of reality as they do since they are highly non-uniformly distributed,’ says Prof Coveney.
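The uneven spacing is easy to check. The ‘four billion’ figure is 2^32, the number of distinct 32-bit patterns (some of which encode ±infinity and ‘not-a-number’ values rather than finite numbers). This sketch, assuming only Python's standard `struct` module, reads off the single-precision bit pattern of a number; for positive values those patterns increase with the value, so subtracting them counts how many single-precision numbers lie in an interval.

```python
import struct

def float32_bits(x: float) -> int:
    """Reinterpret the IEEE 754 single-precision encoding of x as an unsigned int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def count_float32_in(lo: float, hi: float) -> int:
    """Number of single-precision values v with lo <= v < hi (lo, hi > 0).

    For positive floats the bit patterns increase monotonically with value,
    so the count is simply the difference of the two encodings.
    """
    return float32_bits(hi) - float32_bits(lo)

for lo, hi in [(0.125, 0.25), (0.25, 0.5), (0.5, 1.0), (1.0, 2.0), (1024.0, 2048.0)]:
    print(f"[{lo}, {hi}): {count_float32_in(lo, hi):,} values")

print(f"total 32-bit patterns: {2**32:,}")
```

Every one of these intervals, whether it is 0.125 wide or 1024 wide, contains the same 2^23 values, which is why the numbers are packed far more densely near zero.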

The richness of the real world is entrained with irrational numbers which cannot be represented on any digital computer (‘and will not be representable on a quantum computer either’, he adds). ‘For Lorenz, it was a very small change in the last few decimal places in the numbers used to start a simulation that caused his diverging results,’ he says. ‘What neither he nor others realised, and is highlighted in our new work, is that any such finite (rational) initial condition describes a behaviour which may be statistically highly unrepresentative’.

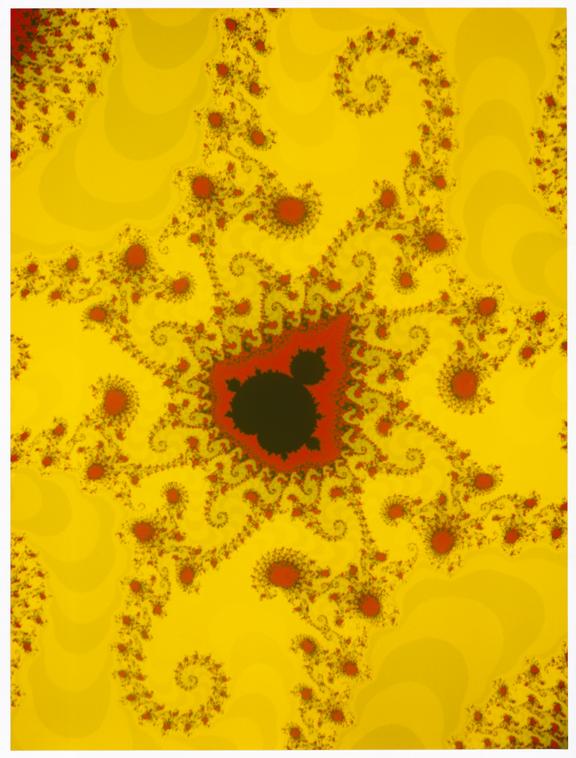

To investigate, Prof Coveney and his colleagues used a simple mathematical recipe for chaos called the generalised Bernoulli map, in which a real number between 0 and 1 is multiplied by a constant greater than 1 at each iteration, with the catch that whenever the result exceeds 1, only the remainder (the value modulo 1) is carried into the next iteration.
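In symbols, the recipe can be sketched as follows. The notation ($\beta$ for the constant multiplier, $x_n$ for the value after $n$ iterations) is our own choice for illustration and may differ from the paper's:

$$
x_{n+1} = \beta\, x_n \bmod 1, \qquad x_n \in [0, 1), \quad \beta > 1,
$$

where $y \bmod 1 = y - \lfloor y \rfloor$ keeps only the fractional part. For example, with $\beta = 1.5$ and $x_0 = 0.8$: $\beta x_0 = 1.2$, so $x_1 = 0.2$; then $x_2 = 0.3$ and $x_3 = 0.45$. The case $\beta = 2$ is the classic doubling map.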

Despite the simplicity of the recipe, the successive values land at apparently random locations between 0 and 1, which is the hallmark of chaos. But when the researchers compared the known mathematical behaviour of the generalised Bernoulli map with what digital computers predict, they found two surprises.

When the constant multiplier is an even integer, the predicted behaviour is ‘completely wrong,’ says Prof Coveney. For more typical values of the constant, they found that although the computed behaviour looks correct, it can often be wrong, with errors of up to 15%.
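The even-integer failure can be reproduced in a few lines. A Python `float` is an IEEE 754 double, and every such number is a dyadic rational (an integer divided by a power of 2). Multiplying by 2 and discarding the integer part strips one binary digit per step, so under $\beta = 2$ every floating-point starting value eventually lands exactly on 0 and stays there, even though almost every real starting value keeps wandering over the interval forever. This sketch uses $\beta = 2$ as the simplest even case; the starting value 0.1 is our own choice for illustration.

```python
from fractions import Fraction

def bernoulli_step(x, beta):
    """One step of the generalised Bernoulli map: x -> (beta * x) mod 1.

    Works for Python floats (IEEE 754 double precision) and for exact Fractions.
    """
    return (beta * x) % 1

# Double-precision orbit of the doubling map (beta = 2), started at 0.1.
x = 0.1
float_orbit = [x]
while x != 0.0 and len(float_orbit) < 200:
    x = bernoulli_step(x, 2)
    float_orbit.append(x)

# The same number of steps in exact rational arithmetic, started at exactly 1/10.
q = Fraction(1, 10)
exact_orbit = [q]
for _ in range(len(float_orbit) - 1):
    q = bernoulli_step(q, 2)
    exact_orbit.append(q)

print("float orbit hits 0.0 after", len(float_orbit) - 1, "steps")
print("exact orbit, last four values:", [str(v) for v in exact_orbit[-4:]])
```

The double nearest to 0.1 is 3602879701896397/2^55, so its orbit dies after 55 steps. The exact value 1/10 instead cycles through 1/5, 2/5, 4/5 and 3/5 forever; that periodic orbit is itself atypical, a small instance of the point that a rational starting value can be statistically unrepresentative.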

Though a simple example, the generalised Bernoulli map is mathematically equivalent to many other dynamical systems (that is, systems whose behaviour changes with time) encountered in physics, chemistry, biology and engineering.

Already aware that chaos can make predictions unreliable, scientists take account of this by performing multiple calculations, so-called ‘ensembles’, with slightly different starting conditions and looking at the average outcomes. But the new work shows that this approach, used to predict climate, chemical reactions and more, may contain sizeable errors. ‘These computer-based simulations must now be carefully scrutinised,’ says Prof Coveney.
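A toy ensemble shows why averaging need not rescue a simulation. If starting points are spread uniformly over [0, 1), the exact ensemble mean of the doubling map stays at 1/2 at every step, because the uniform distribution is unchanged by the map. The minimal sketch below assumes CPython's `random.random()`, which returns multiples of 2^-53; the ensemble size and step counts are arbitrary illustrative choices.

```python
import random

def ensemble_mean(beta: float, n_members: int, n_steps: int, seed: int = 0) -> float:
    """Average of x_n over an ensemble of random starting points, each iterated
    n_steps times under x -> (beta * x) mod 1 in double precision."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_members):
        x = rng.random()  # in CPython, a multiple of 2**-53 in [0, 1)
        for _ in range(n_steps):
            x = (beta * x) % 1
        total += x
    return total / n_members

# Exact answer for a uniform ensemble: 0.5 at every step.
print(ensemble_mean(2, n_members=1000, n_steps=10))
print(ensemble_mean(2, n_members=1000, n_steps=60))
```

After a few steps the average looks right, but once every member has shed its 53 binary digits the whole ensemble sits at 0 and the mean is 0.0. Taking more members does not help, because every one of them fails in the same way.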

Moreover, Prof Coveney points out that the use of data from chaotic simulations to build a well-known kind of AI, called machine learning, ‘may likewise omit some of the complexity of the real world and needs to be treated with scepticism; attempts to train such algorithms on the basis of simulation data from chaotic dynamical systems are liable, on occasion, to produce “artificial stupidity”’.

Scientists need to take a careful look at how far their forecasting and modelling with digital computers do indeed deviate from reality, he adds. ‘We have shown, for perhaps the simplest possible case, that the deviations are neither obvious nor small, and do not disappear as numerical precision is increased.’

More work is required to examine the extent to which what he calls the ‘pathology in the floating-point arithmetic’ will cause problems in everyday computational science and modelling and, if errors are found, how to correct them.