Scientists have talked for decades about how, in theory at least, quantum computers could revolutionise science: they offer a way to crack ‘unbreakable codes’, model the way that drugs work in the body, and answer many questions that lie beyond the capability of current ‘classical’ computers in our smartphones and desktops.

The reality has lagged behind the hype, but in recent years major players in traditional ‘classical’ computing, from IBM, Google and Microsoft to, now, Intel, have entered the race to build commercial quantum machines. Intel thinks the answer might lie in extending the semiconductor technology on which everyday computers depend.

The difference in the way quantum and familiar ‘classical’ computers work is profound. Classical computers encode information in bits, each of which takes the value 1 or 0; you can think of bits as a currency of on/off switches that control how a computer works.

Quantum computers, on the other hand, are based on ‘qubits’, which can represent both a 1 and a 0 at the same time. If 1 and 0 are the heads and tails of a coin, a qubit is akin to a spinning coin and, like the real thing, its state is hard to maintain. The fates of qubits can also be correlated by a process called ‘entanglement’, for instance by bathing them in a delicate magnetic field.
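The spinning-coin picture has a standard mathematical form: a qubit's state is described by two amplitudes, one for 0 and one for 1, whose squared magnitudes give the odds of each outcome when the qubit is measured. The sketch below assumes that textbook state-vector model; the function names are hypothetical, and it says nothing about how any real machine stores its qubits.

```python
import math
import random

# A minimal sketch, assuming the textbook state-vector model of one qubit:
# alpha is the amplitude for reading 0, beta the amplitude for reading 1.


def probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Chances of reading 0 or 1 when the qubit is measured."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if not math.isclose(norm, 1.0):
        raise ValueError("amplitudes must be normalised")
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Stop the 'spinning coin': return heads (0) or tails (1) at random."""
    p0, _ = probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# An equal superposition: 'both' heads and tails until it is measured.
alpha = beta = 1 / math.sqrt(2)
print(probabilities(alpha, beta))  # roughly (0.5, 0.5)

rng = random.Random(42)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1
print(counts)  # close to an even split between 0 and 1
```

Each measurement gives a single ordinary 0 or 1; the superposition only shows up in the statistics over many runs.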

Currently, however, there is no consensus on the best way to make a quantum computer. Designs vary depending on the type of qubit, how the qubits are manipulated and how they are made to interact with one another.

Some quantum computers rely on superconductors – materials that have zero resistance to the flow of electricity at low temperatures – while others exploit the spin of a single atom, charged atoms (ions), the spin of protons or even atomic defects in diamonds.

Whatever the approach, one of the key hurdles is achieving uniformity and high fidelity across large numbers of qubits, an essential requirement for fault-tolerant quantum systems.

Intel’s approach is to harness the extraordinary precision used every day to put billions of transistors on silicon chips. By one estimate there will be more transistors on the planet than human cells by next year, making them the most ubiquitous objects made by humanity.

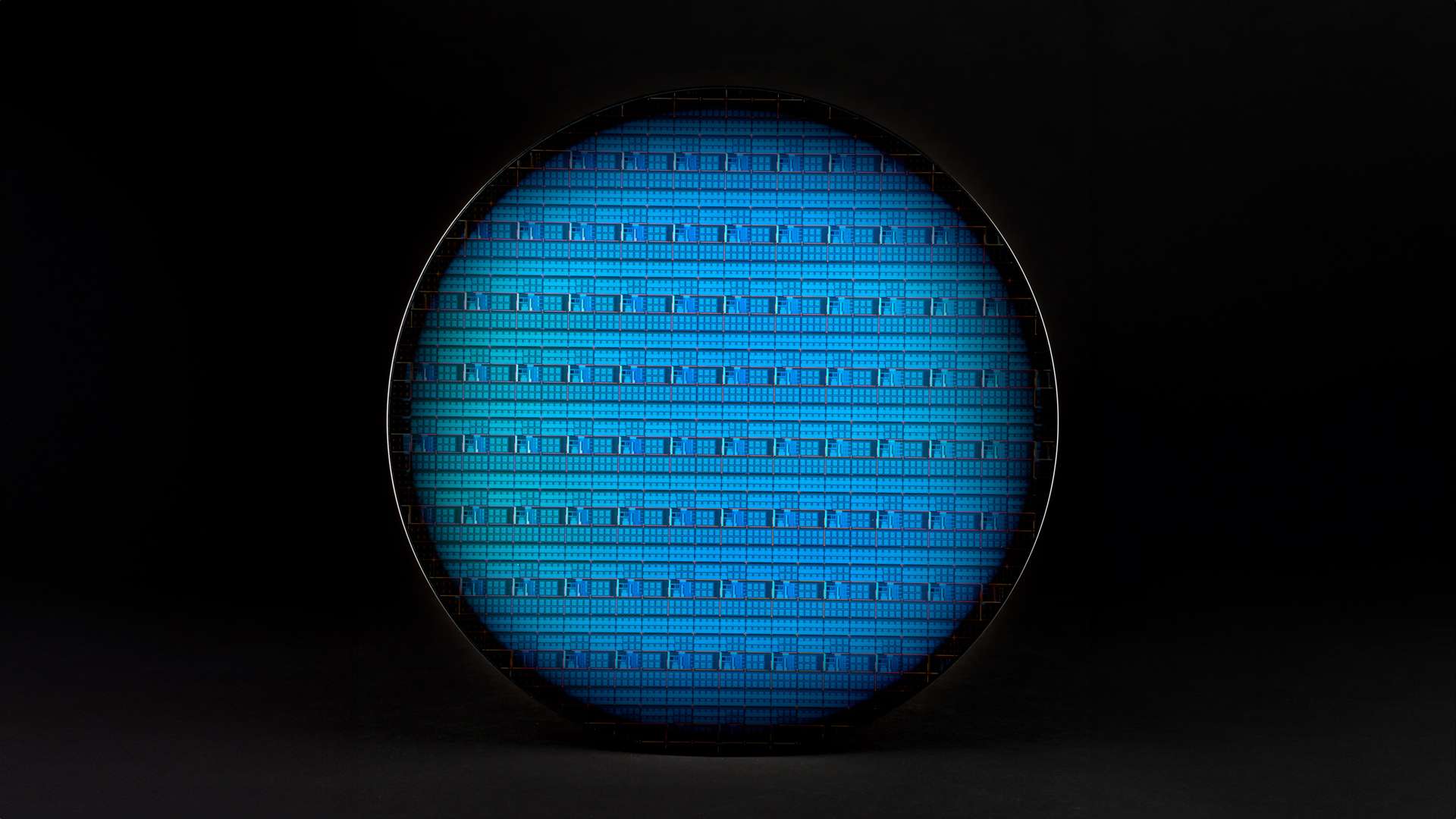

Recently, the journal Nature published a paper by a team led by Jim Clarke at Intel that unveiled a 300mm silicon wafer – around a foot across, the biggest used by the industry – on which they demonstrated uniform, high-fidelity qubits made with the same manufacturing processes used for classical microchips.

‘Quantum computing is the computer technology of the future’, Dr. Clarke told me. ‘If this decade is the decade of AI, the next is the decade of quantum.’

His team harnessed what are called ‘spin qubits’, in which information is stored in a single electron in the wafer. The wafer is held at a temperature 250 times colder than deep space so that the qubits are not disturbed by the atomic jiggling caused by heat energy. In a magnetic field, the spin of this single electron can be either in the spin-down (low energy) or the spin-up (high energy) state, akin to the zeros and ones of a classical computer.
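As a rough check on what ‘250 times colder than deep space’ means, the sketch below assumes that deep space sits at the temperature of the cosmic microwave background, about 2.725 kelvin; the article does not give a figure, and the constant and function names are illustrative.

```python
# Assumption: "deep space" means the cosmic microwave background,
# about 2.725 kelvin. The article itself does not give a figure.
DEEP_SPACE_K = 2.725
ABSOLUTE_ZERO_C = -273.15  # 0 kelvin expressed in degrees Celsius


def times_colder_than_space(factor: float) -> float:
    """Temperature in kelvin that is `factor` times colder than deep space."""
    return DEEP_SPACE_K / factor


wafer_k = times_colder_than_space(250)
print(f"{wafer_k * 1000:.1f} millikelvin")  # 10.9
print(f"{wafer_k + ABSOLUTE_ZERO_C:.3f} degrees Celsius")
```

On that assumption the wafer sits roughly 11 thousandths of a degree above absolute zero.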

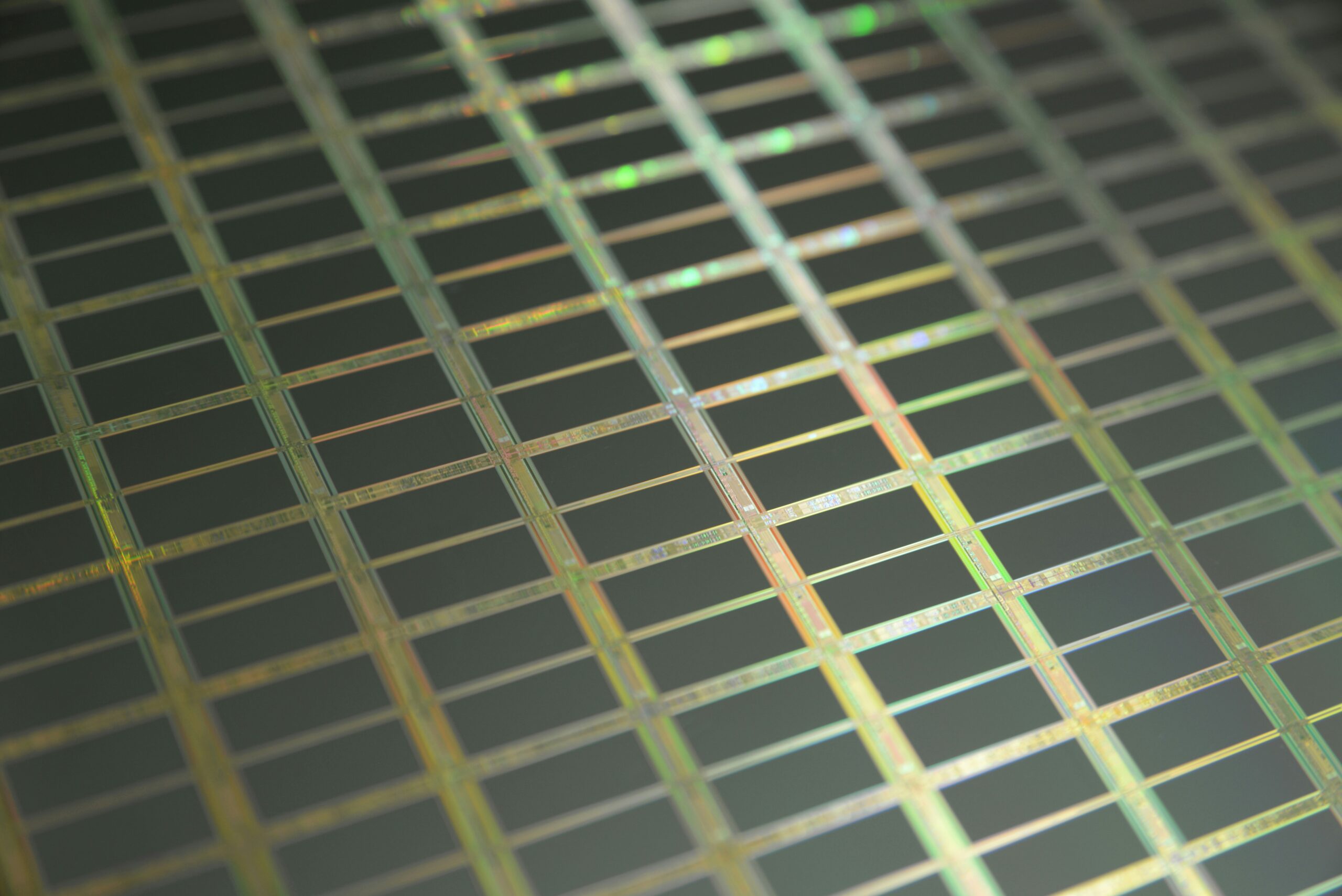

Each qubit is essentially a single-electron transistor on the wafer, which allows Intel to fabricate it at its $50 billion plant in Hillsboro, Oregon, a site so big that 22,000 people work there. To create the quantum chip, the team used the production line for complementary metal-oxide-semiconductor (CMOS) chips, each of which contains billions of transistors.

Because they are the size of a transistor, the spin qubits are up to one million times smaller than other qubit types, each occupying a square roughly 50-100 billionths of a metre (nanometres) on a side. That could allow efficient scaling, so that more complex quantum computers can be crammed onto a chip of the same size.

What is remarkable is that each extra qubit doubles the number of combinations of 0s and 1s the machine can hold at once, so its processing power scales exponentially: a quantum computer with two qubits could run four calculations at the same time, while a 1,000-qubit device could process more simultaneous calculations than there are particles in the known universe.
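The doubling behind this claim is easy to check with Python's exact integer arithmetic. The sketch assumes the commonly quoted rough estimate of 10**80 particles in the observable universe, which is not a figure from the article; `basis_states` and `qubits_to_exceed` are hypothetical helper names.

```python
# Assumption: a commonly quoted rough estimate of ~10**80 particles
# in the observable universe (not a figure from the article).
PARTICLES_IN_UNIVERSE = 10 ** 80


def basis_states(n_qubits: int) -> int:
    """Number of 0/1 combinations an n-qubit register can hold at once."""
    return 2 ** n_qubits


def qubits_to_exceed(target: int) -> int:
    """Smallest qubit count whose number of combinations exceeds `target`."""
    n = 0
    while basis_states(n) <= target:
        n += 1
    return n


for n in (1, 2, 3, 10, 300, 1000):
    digits = len(str(basis_states(n)))
    # Print small counts exactly and huge ones as an order of magnitude.
    shown = str(basis_states(n)) if digits < 16 else f"~10^{digits - 1}"
    print(f"{n:>4} qubits -> {shown} combinations")

print(qubits_to_exceed(PARTICLES_IN_UNIVERSE))  # 266
```

On that estimate, about 266 qubits already pass the particle count, so 1,000 qubits clears it by an enormous margin. The count is of combinations held at once; reading out the register still yields a single result, which is why quantum algorithms need clever ways to extract a useful answer.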

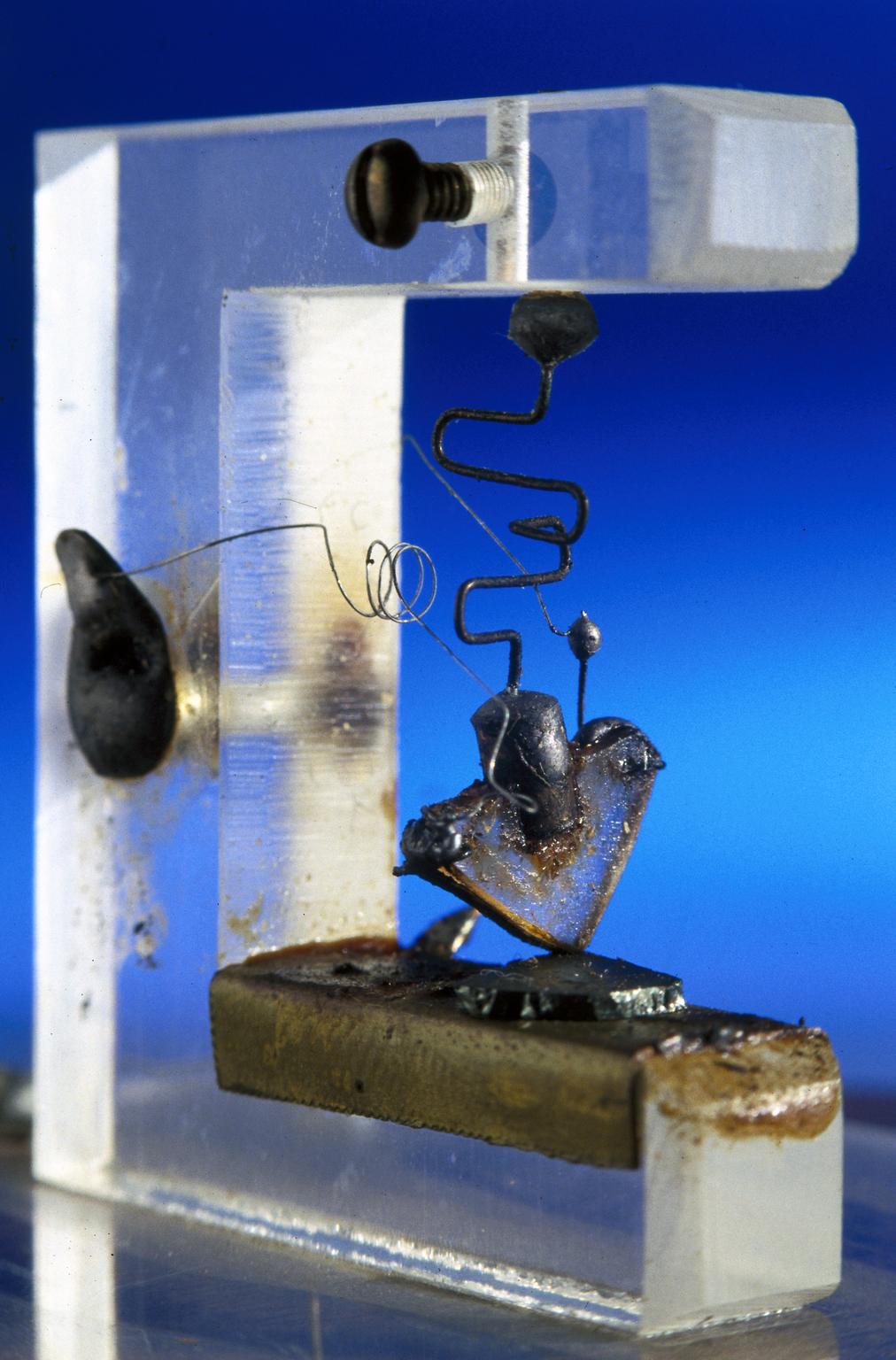

‘We are probably going to need a million qubits to do something that is going to change the world,’ said Dr. Clarke. Though it took 42 years to go from the first transistor in 1947 to the first million-transistor processor in 1989, he believes that by exploiting the existing infrastructure used to make microchips, Intel can speed up the development of mega-qubit architectures.
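The 1947 and 1989 figures imply a doubling time that can be worked out directly. The sketch assumes smooth exponential growth, a simplification of real transistor history, and then, purely as a hypothetical illustration, applies the same pace to going from a 12-qubit chip to a million qubits; the variable names are illustrative.

```python
import math

# Figures from the article: the first transistor (1947) and the first
# million-transistor processor (1989).
START_YEAR, START_COUNT = 1947, 1
END_YEAR, END_COUNT = 1989, 1_000_000


def doubling_time(years: float, growth_factor: float) -> float:
    """Years per doubling, assuming smooth exponential growth."""
    return years / math.log2(growth_factor)


t = doubling_time(END_YEAR - START_YEAR, END_COUNT / START_COUNT)
print(f"one doubling every {t:.2f} years")  # about 2.11

# Hypothetical: the same pace applied to going from 12 qubits to a million.
doublings_needed = math.log2(1_000_000 / 12)
years_needed = doublings_needed * t
print(f"{doublings_needed:.1f} doublings, about {years_needed:.0f} years")
```

At the historical pace of one doubling roughly every 2.1 years, the hypothetical jump from 12 to a million qubits would take more than three decades, the kind of timescale Dr. Clarke hopes existing chipmaking infrastructure can shorten.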

The qubits created by Intel rely on an elaboration of the architecture of a conventional silicon microchip transistor, using what are called ‘barrier gates’ – where a gate is a kind of switch – to create a little island of electrons called a ‘quantum dot’.

Another microscopic structure, called a ‘plunger gate’, can control the flow of electrons through the dot so that only one remains; it is the spin of this solitary electron that forms the qubit. In all, the manufacturing process deviates by only a couple of steps from that used to make an ordinary microchip, said Dr. Clarke.

On the 300mm silicon wafer, the team made hundreds of copies of the same quantum chip, each of these ‘Tunnel Falls’ chips consisting of a line of 12 quantum dots. The eventual aim, however, is a wafer full of chips, each containing up to a million qubits.

One big issue is decoherence, where qubits lose their useful ambiguity and become humdrum 1s and 0s. Even when operated in refrigerators near absolute zero (minus 273 degrees Celsius), current quantum computers can maintain error-free coherence for only a tiny fraction of a second.

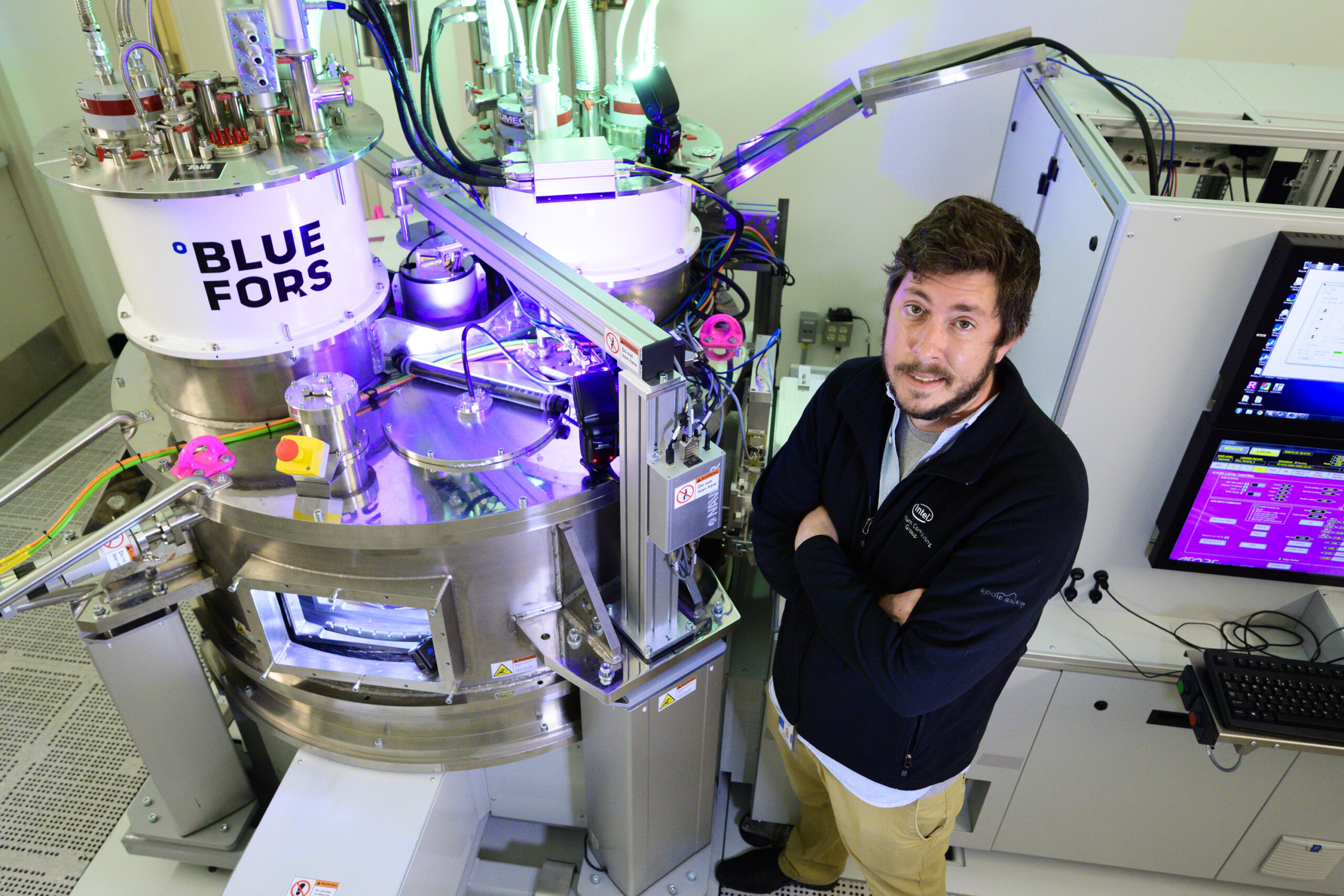

Intel’s quantum hardware team developed a ‘cryoprober’, a room full of low-temperature equipment that lets them gather performance data from across the giant wafer to explore this key issue. ‘We can get a whole year’s worth of data with this tool,’ said Dr. Clarke. That data will in turn help the team pick the best chips for further testing and hone production methods.

Reassuringly, the researchers found that single-electron devices reached 99.9% gate fidelity, the highest yet achieved with this approach. The next step is to move from lines of 12 qubits to two-dimensional arrays of many more. ‘It is really exciting for us,’ said Dr. Clarke.
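Why does the last tenth of a percent matter? Under the simplifying assumption that every gate fails independently with the same probability, the chance that a circuit of N gates runs without a single error is the fidelity raised to the power N. The sketch applies that toy model to the 99.9% figure; it is not Intel's analysis, and real devices have correlated errors and different fidelities for one- and two-qubit gates.

```python
# Toy model (an assumption, not Intel's analysis): each gate succeeds
# independently with the same probability, so a circuit of n gates is
# error-free with probability fidelity ** n.
GATE_FIDELITY = 0.999  # the 99.9% figure quoted in the article


def error_free_probability(fidelity: float, n_gates: int) -> float:
    """Chance that every one of n_gates independent gates succeeds."""
    return fidelity ** n_gates


for n in (10, 100, 1_000, 10_000):
    p = error_free_probability(GATE_FIDELITY, n)
    print(f"{n:>6} gates: {p:.3f}")
```

Even at 99.9%, a 1,000-gate circuit runs error-free only about 37% of the time in this model, which is why fault-tolerant designs pair high fidelity with error correction.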

Researchers at the Universities of Melbourne and Manchester, led by Prof Richard Curry, recently found a way to make ultra-purified silicon that can sustain coherence for longer, bringing high-performance quantum computers a big step closer. Dr. Clarke said that his Intel team read the paper ‘the minute it came out’ and that, if the method can be scaled up commercially, ‘it could be a game changer.’